After reading a bit about the RAID10 md personality, I was interested in seeing what the differences would be in a 2-drive setup. With standard RAID10 there wouldn’t be any difference from a RAID1 setup, but this md personality has an option called “far” copies. This needs a bit of explaining. A normal RAID1 setup with 2 disks would look like this:

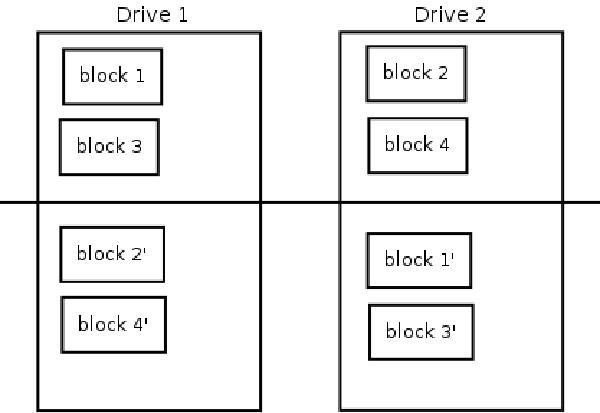

Every block has its copy (marked ’) on the other drive at the same position. With the normal “near” copy layout, the RAID10 personality would order the blocks in the same way. With the “far” copy layout, however, both drives are divided into 2 halves. The copy of each block is still located on the other drive, but in the other half of that drive.
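
To make the two layouts a bit more concrete, here is a small shell sketch of where each chunk and its copy would land on 2 drives. It is only a toy model of the description above, not what the md driver actually runs; the function names and the `HALF` value (number of chunks in one half of a drive) are made up for the example.

```shell
#!/bin/sh
# Toy model of md RAID10 chunk placement on exactly 2 drives.
# Offsets are counted in chunks from the start of each drive.
# HALF is a hypothetical value: the number of chunks in one half of a drive.
HALF=4

# "near" layout: both copies sit at the same offset, just like RAID1.
near_layout() {
    chunk=$1
    echo "chunk $chunk: drive0@$chunk drive1@$chunk"
}

# "far" layout: the first halves of the drives form a stripe, and the copy
# of each chunk goes to the other drive, in that drive's second half.
far_layout() {
    chunk=$1
    drive=$(( chunk % 2 ))             # the stripe alternates between drives
    offset=$(( chunk / 2 ))            # position inside the first half
    copy_drive=$(( (chunk + 1) % 2 ))  # always the other drive
    copy_offset=$(( HALF + offset ))   # same position, but in the second half
    echo "chunk $chunk: drive${drive}@${offset} copy:drive${copy_drive}@${copy_offset}"
}

for c in 0 1 2 3; do
    far_layout "$c"
done
```

In this model chunk 1 sits at the start of drive 1, while its copy sits at the start of the second half of drive 0, so consecutive chunks can be read from both drives at the same time.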

The effect is still the same: each drive holds all the blocks, so a drive can still fail without impact on the data. The interesting part is that the first half of each drive is now ordered as a stripe across both drives. That means there should be an improvement when reading data in this configuration. Writing is different, though. There should be a lot more seeks to place the data on the drives, since every write alternates between the two halves of each drive.

Just to see how it holds up in real life, I did a small test on my server with 2 spare partitions on 2 different drives. First I set up a standard RAID1 with a chunk size of 64 KB and made an ext3 filesystem:

    mdadm --create /dev/md7 -v --raid-devices=2 --chunk=64 --level=raid1 /dev/sdc2 /dev/sdd2

    mkfs -t ext3 /dev/md7

Then I ran bonnie++ on it:

    Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
    Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
    Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
    avon            13G   301  98 57155  27 25712  12  1013  97 68186  15 199.0  13
    Latency               62381us    2788ms    2435ms   23504us     244ms    1130ms
    Version  1.96       ------Sequential Create------ --------Random Create--------
    avon                -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
                  files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                     16 10916  27 +++++ +++ 31083  68 25761  60 +++++ +++ 31293  71
    Latency               12609us     873us     922us   75818us     121us     903us

Then I destroyed the RAID1 and made a RAID10 with far copies:

    mdadm --stop /dev/md7

    mdadm --create /dev/md7 -v --raid-devices=2 --chunk=64 --level=raid10 -pf2 /dev/sdc2 /dev/sdd2

    mkfs -t ext3 /dev/md7

And again running bonnie++:

    Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
    Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
    Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
    avon            13G   314  98 56406  23 27385   7  1272  98 129318  18 180.4  12
    Latency               57589us    4040ms    2266ms   28018us     118ms     678ms
    Version  1.96       ------Sequential Create------ --------Random Create--------
    avon                -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
                  files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                     16 13633  32 +++++ +++ 31356  68 28734  69 +++++ +++ 29882  65
    Latency               22466us     905us     907us   17400us     127us     121us

So judging from the results, the increase in read performance is there: sequential block reads nearly doubled, from 68186 K/sec to 129318 K/sec. On this size of partition there seems to be no big difference in write performance (57155 K/sec versus 56406 K/sec for block writes). However, I can imagine this getting worse as the partition size increases, because the seeks between the two halves of each drive get longer. Still, this is an interesting setup to look at, especially for system drives (excluding the boot partition of course, unless you have a bootloader which supports this).
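
For anyone who wants to compare their own runs the same way, the block throughput figures can be put side by side with a few lines of shell. This is just a sketch: the `percent` helper is a made-up name, and the numbers are the block read and block write values copied by hand from the two bonnie++ runs above.

```shell
#!/bin/sh
# Block throughput in K/sec, copied from the two bonnie++ runs above.
raid1_read=68186
far_read=129318
raid1_write=57155
far_write=56406

# percent OLD NEW: NEW as a whole percentage of OLD, rounded down
# (plain integer shell arithmetic, so no fractions).
percent() {
    echo $(( $2 * 100 / $1 ))
}

echo "block read:  RAID10 far is at $(percent "$raid1_read" "$far_read")% of RAID1"
echo "block write: RAID10 far is at $(percent "$raid1_write" "$far_write")% of RAID1"
```

With the values above this reports 189% for block reads and 98% for block writes.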